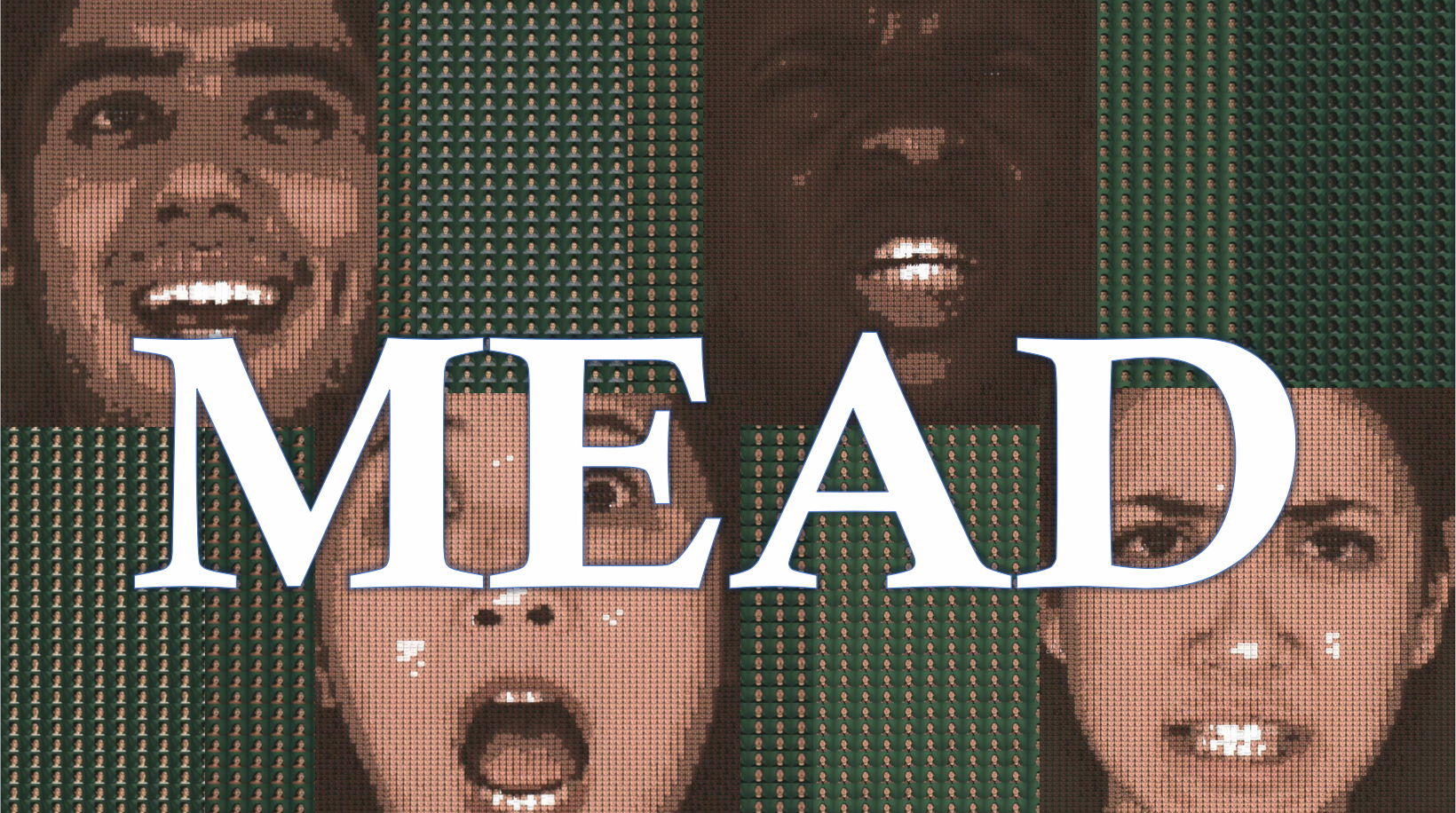

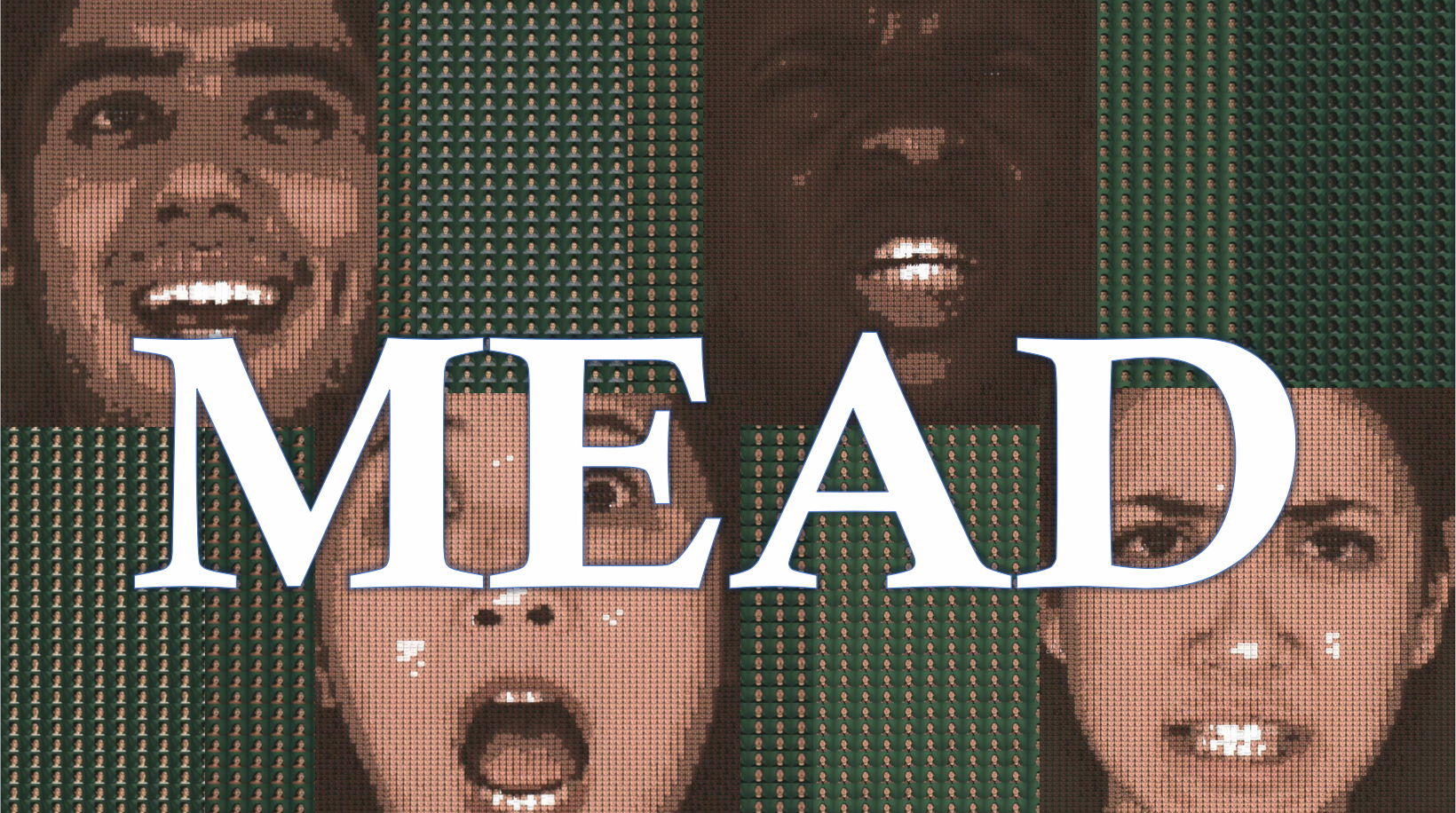

The synthesis of natural emotional reactions is an essential criterion in vivid talking-face video generation. Previous work has nevertheless seldom considered this criterion, owing to the absence of a large-scale, high-quality emotional audio-visual dataset. To address this issue, we build the Multi-view Emotional Audio-visual Dataset (MEAD), a talking-face video corpus featuring 60 actors and actresses talking with eight different emotions at three different intensity levels. High-quality audio-visual clips are captured from seven different view angles in a strictly controlled environment. Together with the dataset, we release an emotional talking-face generation baseline that enables the manipulation of both emotion and its intensity. Our dataset could benefit a number of research fields, including conditional generation, cross-modal understanding, and expression recognition.

Acknowledgements